This release includes model weights and starting code for pre-trained and fine-tuned Llama language models ranging from 7B to 70B parameters. … 26, 2024: We added examples to showcase OctoAI's cloud APIs for Llama 2, CodeLlama, and LlamaGuard. This repository contains an implementation of the LLaMA 2 (Large Language Model Meta AI) model, a Generative Pretrained Transformer. We will use Python to write our script to set up and run the pipeline.
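As a rough illustration of such a script, the sketch below wraps the Hugging Face `transformers` text-generation pipeline. The model id `meta-llama/Llama-2-7b-chat-hf`, the sampling settings, and the helper names `generation_kwargs` and `run_pipeline` are assumptions for illustration, not part of the release. The checkpoint is gated and needs approved access, and `device_map="auto"` also requires the `accelerate` package.

```python
# Sketch only: the model id and generation settings are illustrative assumptions.
MODEL_ID = "meta-llama/Llama-2-7b-chat-hf"  # assumed; gated checkpoint on the Hugging Face Hub


def generation_kwargs(max_new_tokens=128, temperature=0.7):
    """Collect the sampling settings passed to the pipeline call (hypothetical defaults)."""
    return {
        "max_new_tokens": max_new_tokens,
        "do_sample": True,
        "temperature": temperature,
        "top_p": 0.9,
    }


def run_pipeline(prompt, model_id=MODEL_ID):
    """Load the model and return the generated text for `prompt`."""
    # Imported lazily so this file can be imported without the heavy dependencies.
    import torch
    from transformers import pipeline

    generator = pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.float16,  # half precision to reduce memory use
        device_map="auto",          # requires the `accelerate` package
    )
    outputs = generator(prompt, **generation_kwargs())
    return outputs[0]["generated_text"]
```

Calling `run_pipeline("Explain what a context window is in one sentence.")` downloads the weights on first use, so it is best run on a machine with a GPU and enough disk space.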

Under "Download custom model or LoRA", enter `TheBloke/Llama-2-7b-Chat-GPTQ`. To download from a specific branch, enter for example `TheBloke/Llama-2-7b`… Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. An example configuration sets `MODEL_ID` to `TheBloke/Llama-2-7b-Chat-GPTQ` and `TEMPLATE` to "You are a nice and helpful member from the XYZ team." If you want to run a 4-bit Llama 2 model such as Llama-2-7b-Chat-GPTQ, set `BACKEND_TYPE` to `gptq` in your env file, as in the example `env7b_gptq_example`.
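The settings named above can be read with a few lines of standard-library Python. This is a minimal sketch: the `KEY = "value"` file layout and the helper names `parse_env` and `build_prompt` are assumptions for illustration, not the project's own loader. The prompt layout follows the `[INST]` / `<<SYS>>` format used by the Llama 2 chat models, with `TEMPLATE` acting as the system prompt.

```python
# Hypothetical env-style config; the keys mirror the ones named in the text.
ENV_TEXT = '''
MODEL_ID = "TheBloke/Llama-2-7b-Chat-GPTQ"
BACKEND_TYPE = "gptq"
TEMPLATE = "You are a nice and helpful member from the XYZ team."
'''


def parse_env(text):
    """Parse KEY = "value" lines into a dict, skipping blanks and # comments."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        config[key.strip()] = value.strip().strip('"')
    return config


def build_prompt(system_prompt, user_message):
    """Format a single-turn prompt in the Llama 2 chat layout."""
    return (
        f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n"
        f"{user_message.strip()} [/INST]"
    )


config = parse_env(ENV_TEXT)
prompt = build_prompt(config["TEMPLATE"], "How do I reset my password?")
print(prompt)
```

The beginning-of-sequence token is left out on purpose, since the tokenizer normally adds it when the prompt is encoded.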

In this work we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. The video above goes over the architecture of Llama 2, a comparison of Llama 2 and Llama 1, and finally a comparison of Llama 2 against other… The Llama 2 paper describes the architecture in enough detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers, where you have to deduce it. Llama 2: Open Foundation and Fine-Tuned Chat Models (last updated 14 Jan 2024). Please note: this post is mainly intended for my personal use. Our pursuit of powerful summaries leads to the `meta-llama/Llama-2-7b-chat-hf` model, a Llama 2 version with 7 billion parameters. However, the Llama 2 landscape is vast.

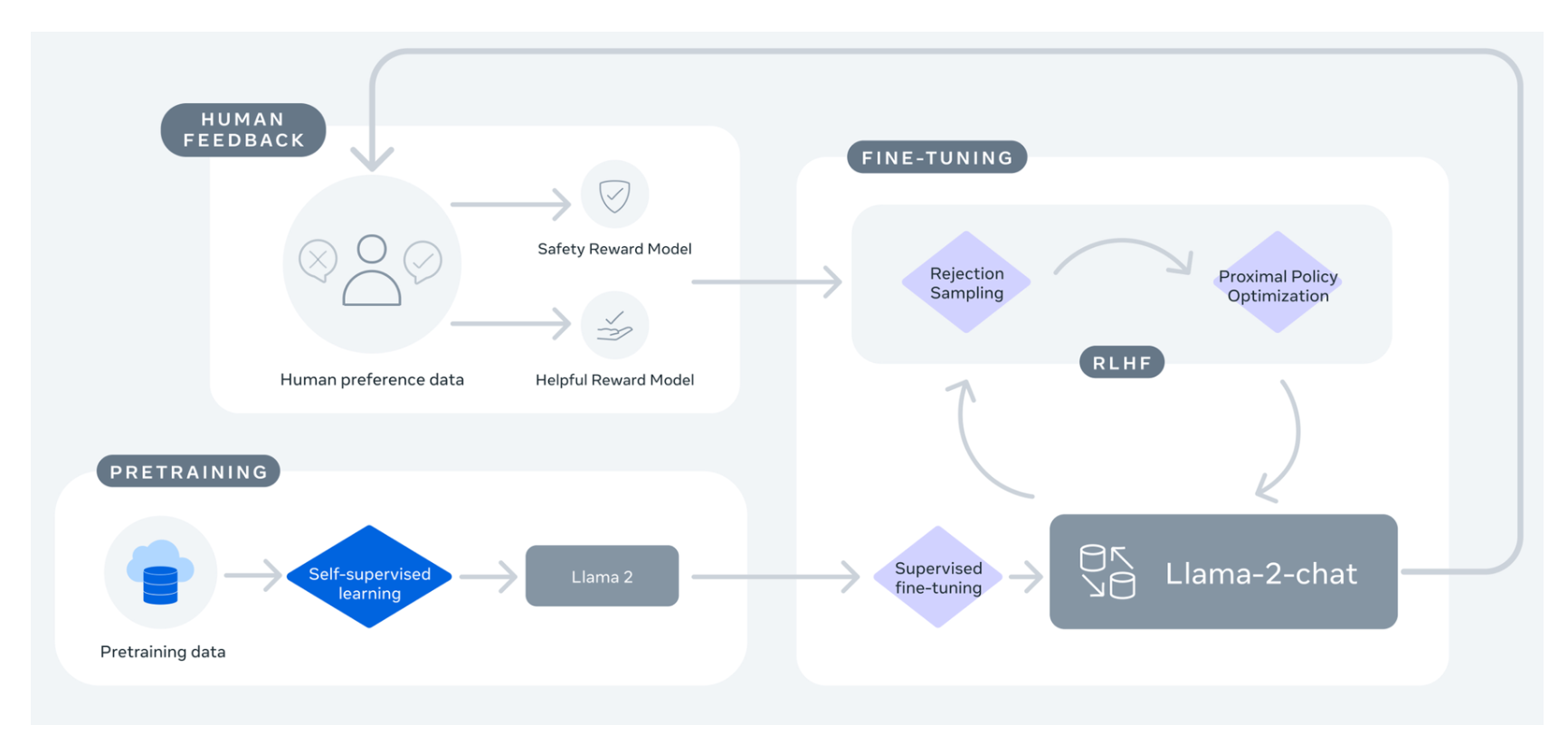

Llama 2's fine-tuning process incorporates Supervised Fine-Tuning (SFT) and a combination of alignment techniques, including Reinforcement Learning with Human Feedback (RLHF). Fine-tuning LLMs such as Llama 2 poses distinctive challenges because of the substantial compute and memory it requires. Key concepts in LLM fine-tuning are Supervised Fine-Tuning (SFT), Reinforcement Learning from Human Feedback (RLHF), and the prompt template. One walkthrough shows how to fine-tune Llama 2 7B on a small dataset using a fine-tuning technique called QLoRA, run on Google Colab. The tutorial provides a comprehensive guide to fine-tuning the LLaMA 2 model using techniques like QLoRA, PEFT, and SFT to overcome memory and compute limitations.
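To make the memory argument concrete, here is a back-of-the-envelope sketch. It assumes a nominal 7 billion parameters, Llama 2 7B's hidden size of 4096 and 32 layers, and a hypothetical LoRA setup (rank 8 on the query and value projections). It counts weights only and ignores activations, optimizer state, and quantization overhead.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Memory for the weights alone, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


def lora_trainable_params(d_in, d_out, rank, matrices_per_layer, n_layers):
    """LoRA adds two low-rank factors (d_in x r and r x d_out) per adapted matrix."""
    per_matrix = rank * (d_in + d_out)
    return per_matrix * matrices_per_layer * n_layers


N_PARAMS = 7_000_000_000  # nominal size of the 7B model

fp16_gb = weight_memory_gb(N_PARAMS, 16)
int4_gb = weight_memory_gb(N_PARAMS, 4)

# Assumed setup: rank 8 adapters on q_proj and v_proj in each of 32 layers.
trainable = lora_trainable_params(4096, 4096, rank=8, matrices_per_layer=2, n_layers=32)

print(f"fp16 weights:  {fp16_gb:.1f} GB")
print(f"4-bit weights: {int4_gb:.1f} GB")
print(f"LoRA trainable parameters: {trainable:,}")
```

Under these assumptions the base weights shrink from about 14 GB in half precision to about 3.5 GB at 4 bits, while the adapters train roughly 4.2 million parameters. That gap is the main reason a QLoRA-style setup can fit on a single modest GPU.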
